The Jetson Nano is a nice board with the CPU and GPU on the same die.

The GPU runs CUDA and supports TensorRT.

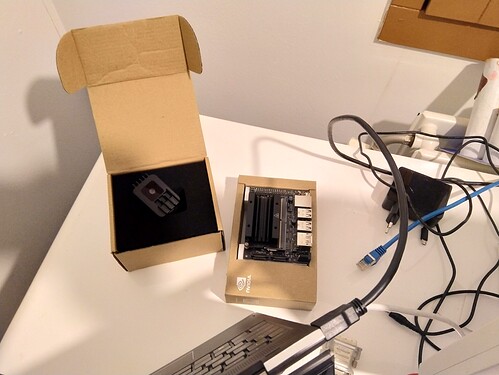

Here is the Nano next to the Oak cam.

It has a good price point, considering it's both the carrier board and the inference engine.

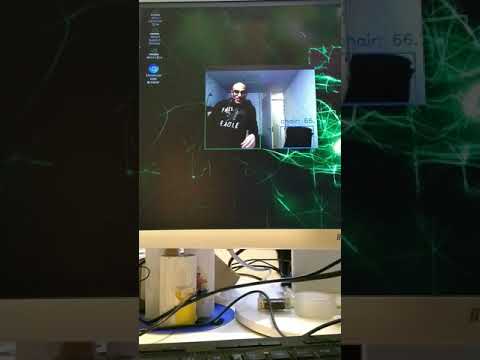

The downsides are slow inference (around 11 fps) and a slow warm-up: it takes around 1000 cycles before it reaches that speed.
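Because of that warm-up, a throughput number is only meaningful if the first cycles are excluded. Below is a minimal sketch of such a measurement in plain Python. `infer` is a hypothetical stand-in for one forward pass of the model (for example, a call into a TensorRT engine); it is not a real library function. The default of 1000 warm-up calls simply mirrors the figure above.

```python
import time
from typing import Callable


def measure_fps(infer: Callable[[], None], warmup: int = 1000, timed: int = 200) -> float:
    """Return steady-state frames per second for `infer`.

    `infer` is a hypothetical placeholder for one model forward pass.
    The first `warmup` calls are executed but not timed, so any start-up
    slowdown does not drag down the reported frame rate.
    """
    if timed <= 0:
        raise ValueError("timed must be positive")
    # Run the warm-up cycles without timing them.
    for _ in range(warmup):
        infer()
    # Time only the steady-state cycles.
    start = time.perf_counter()
    for _ in range(timed):
        infer()
    elapsed = time.perf_counter() - start
    return timed / elapsed


if __name__ == "__main__":
    # Dummy workload sleeping ~1/11 s per call, to mimic an ~11 fps model.
    def fake_infer() -> None:
        time.sleep(1 / 11)

    print(f"{measure_fps(fake_infer, warmup=3, timed=11):.1f} fps")
```

Keeping warm-up and timed runs as separate parameters makes it easy to check how many cycles a given model actually needs before its frame rate stabilises.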

Here is an example result with my own model converted to TensorRT:

It's nice, but in fast-moving environments such as a car, I would prefer the Oak to do the inference and the Nano to act as the central car computer: it would drive the monitor output and run secondary inference that isn't mission-critical (such as voice recognition).

V1: Get the Nano running with my own model.